This page contains helpful tips for working with software, especially the python programming language.

A table of contents for this page includes:

Python

How to get started on your personal computer with python, Anaconda, and environments

Here are four steps you can follow to start working with python:

- Download and install the Anaconda individual edition

- Anaconda/conda both installs python and creates a system by which you can expand python’s functionality through “packages” or “modules.” You can learn more about managing and installing packages here.

- If you’re given the option, you’ll likely want a 64 bit version (as compared to a 32 bit version). If given the option, use python 3, not python 2. python 2 is obsolete. Tim doesn’t have any experience with PyCharm, and so hasn’t chosen to install it himself.

- Before you proceed, review the Getting Started with Conda website.

- Anaconda/conda both installs python and creates a system by which you can expand python’s functionality through “packages” or “modules.” You can learn more about managing and installing packages here.

- Set up your conda environments

- This step is technically optional, but recommended before you dive into new projects. It is necessary if you plan to work with Jupyter Lab.

- Scientific computing with python is done using “packages” or “modules.” Some combinations of modules might be incompatible. Anaconda helps maintain mutually consistent modules, but environments take this a step further. By creating separate “environments,” you create different virtual workshops or sandboxes in which all the tools are designed to work well together, and not conflict.

- Some of the environments that the UI Glacier Dynamics Lab (and Tim personally) uses for his projects are hosted on github here.

- You can also learn more about conda environments below.

- This step is technically optional, but recommended before you dive into new projects. It is necessary if you plan to work with Jupyter Lab.

- Start coding

- Open either Spyder or Jupyter Lab to start an interface for coding pythong. More on these two options below. If you’re just getting started, Tim recommends Jupyter Lab.

- Open either Spyder or Jupyter Lab to start an interface for coding pythong. More on these two options below. If you’re just getting started, Tim recommends Jupyter Lab.

- Learning python

- If you’re new to programming and want to get started learning the python that’s necessary for foundational work in the geosciences, Tim recommends starting with the course Geo-Python from the University of Helsinki. The syllabus is excellent, there are videos with instructions, clear text descriptions, and exercises to practice what you’re learning. The Earth Analytics Bootcamp course from the CU Boulder Earth Lab also is excellent and has an associated online textbook for the course.

- Tim has also had students successfully teach themselves python through various online education platforms such as EdX, Coursera, Udemy, and Educative. Two courses that especially look good, and are targeted towards scientists (as compared to web developers) are here at Educative and here at Udemy. These courses don’t tend to be free (like Geo-Python and Earth Analytics Bootcamp above), but the structure and assignments might work well for some learners. The python website also provides useful tutorials that are helpful for beginners.

- Tim’s favorite go-to reference manual, with exhaustive examples and suggestions for coding if you’re not sure where to start are the SciPy lectures. This manual provides a consistent primer for what’s involved in python’s core functionality and the modules you’ll need to do science, but is more like reading a textbook than taking a classs. You can use the website itself or download the entire lecture set as a 600+ page PDF and scroll through it (Tim’s preferred method). To get going, read and work through Chap 1 (“Python scientific computing ecosystem”), Chap 2 (“The Python language” sections “First Steps,” “Basic types,” and “Control Flow”), and then all of Chap 4 (“NumPy”) and Chap 5 (“Matplotlib”). Don’t just skim these sections, but actually have Jupyter Lab or Spyder open and execute the code, experimenting along the way.

- Once you are past the basics, googling for answers is essential. Stack Overflow and Stackexchange are invaluable tools for professional, beginner, and enthusiastic programmers. Both are question and answer platforms used across the programming community to solve coding problems collaboratively. They will frequently come up in Google searches.

- For learning more advanced topics, such as solving PDEs and matrices, symbolic computing, etc., Tim has had a great experience with the book Numerical Python by Robert Johansson.

- If you’re new to programming and want to get started learning the python that’s necessary for foundational work in the geosciences, Tim recommends starting with the course Geo-Python from the University of Helsinki. The syllabus is excellent, there are videos with instructions, clear text descriptions, and exercises to practice what you’re learning. The Earth Analytics Bootcamp course from the CU Boulder Earth Lab also is excellent and has an associated online textbook for the course.

Environments: internally consistent sets of python modules

If you want to use python on kennicott, after you log in, type conda activate followed by the name of the environment you’d like to work in. You might want to append this to the end of your ~/.bashrc or ~/.bashprofile file, which is a text file that runs automatically every time you log into kennicott. Different sets of packages for different purposes are organized into specific “environments,” which are kept internally consistent. You can identify the available environments by typing conda env list.

In spring 2022, there are four main environments that are available to all users on kennicott: base22a (fundamental, basic scientific computing packages), seisenv22a (for the purpose of working with obspy and seismic data), spatialenv22a (for the purpose of working with spatial data, like shapefiles, geotiffs, and other rasters), and imgenv22a (for image processing with opencv, PIL, etc.). Tim expects that these environments will be updated in the spring and fall of each year, so that there will be an “a” and “b” version after the two digit year for each environment. Each of these environments is maintained on github as well, here, so you can suggest changes, or download them yourself to have consistent environments on personal and work computers. You can change to one of these environments by typing conda activate myenv, in which case you replace myenv with the name of the environment you want to use. If there are python modules you’d like to use that don’t fall in one of these categories, you can create your own environment, or clone an existing environment and then modify it for your purposes.

Preferred python interface

Our lab uses three different Integrated Development Environments (IDEs) or front-ends for interacting with python, and writing, running, debugging, and plotting tasks.

These IDEs have the ability to read/write both python (.py) scripts and jupyter notebooks (.ipynb). If working with notebooks, your figures can be made interactive if you use the appropriate matplotlib backend for your plots. To do so and produce zoomable/pannable, interactive plots, include the following line near the top of your python notebook file:

%matplotlib widget

This is an example of a Jupyter “magic”, and controls iPython operation. Other magics also control plotting, or work within the operating system.

The three python IDEs we use are:

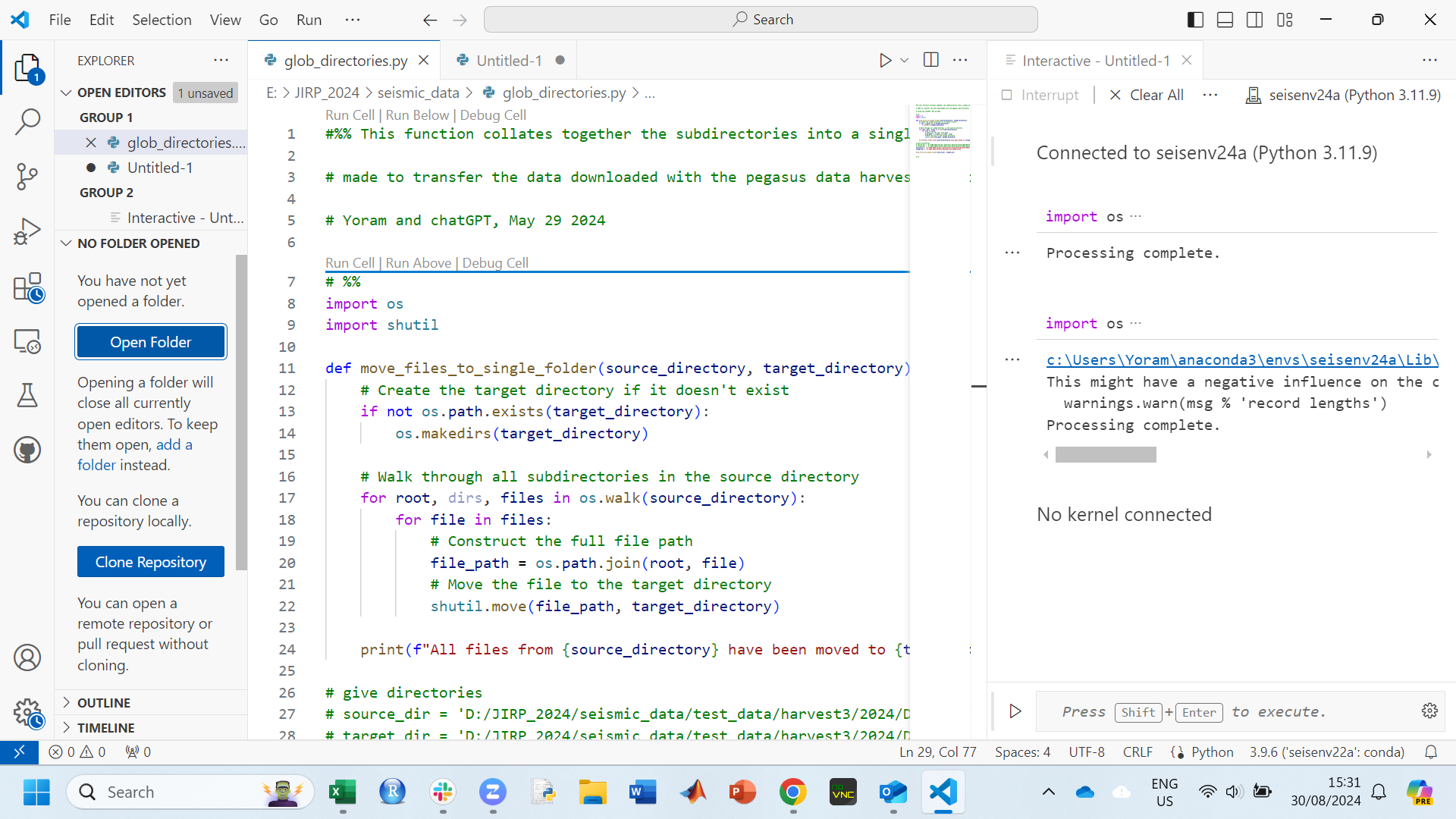

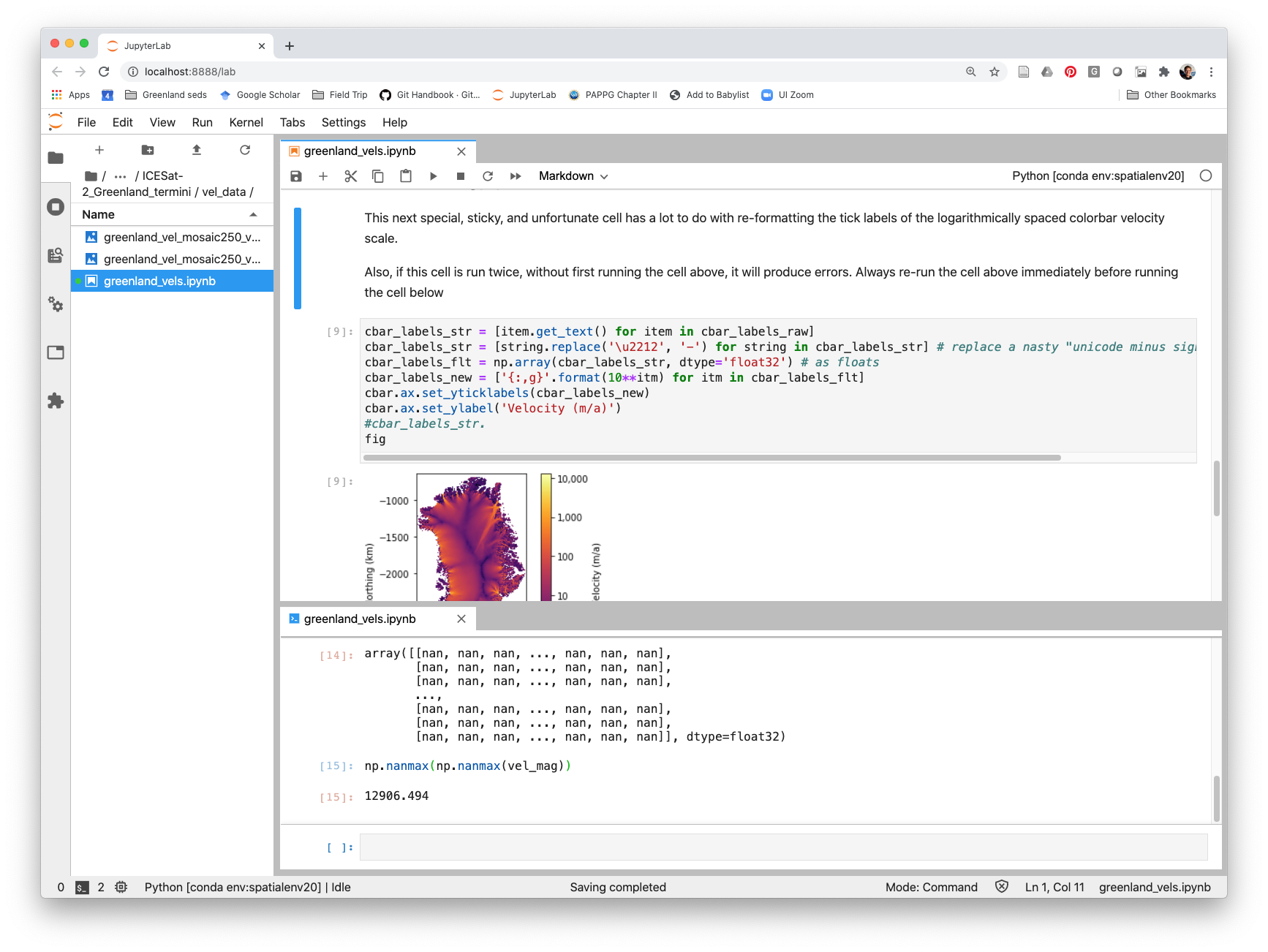

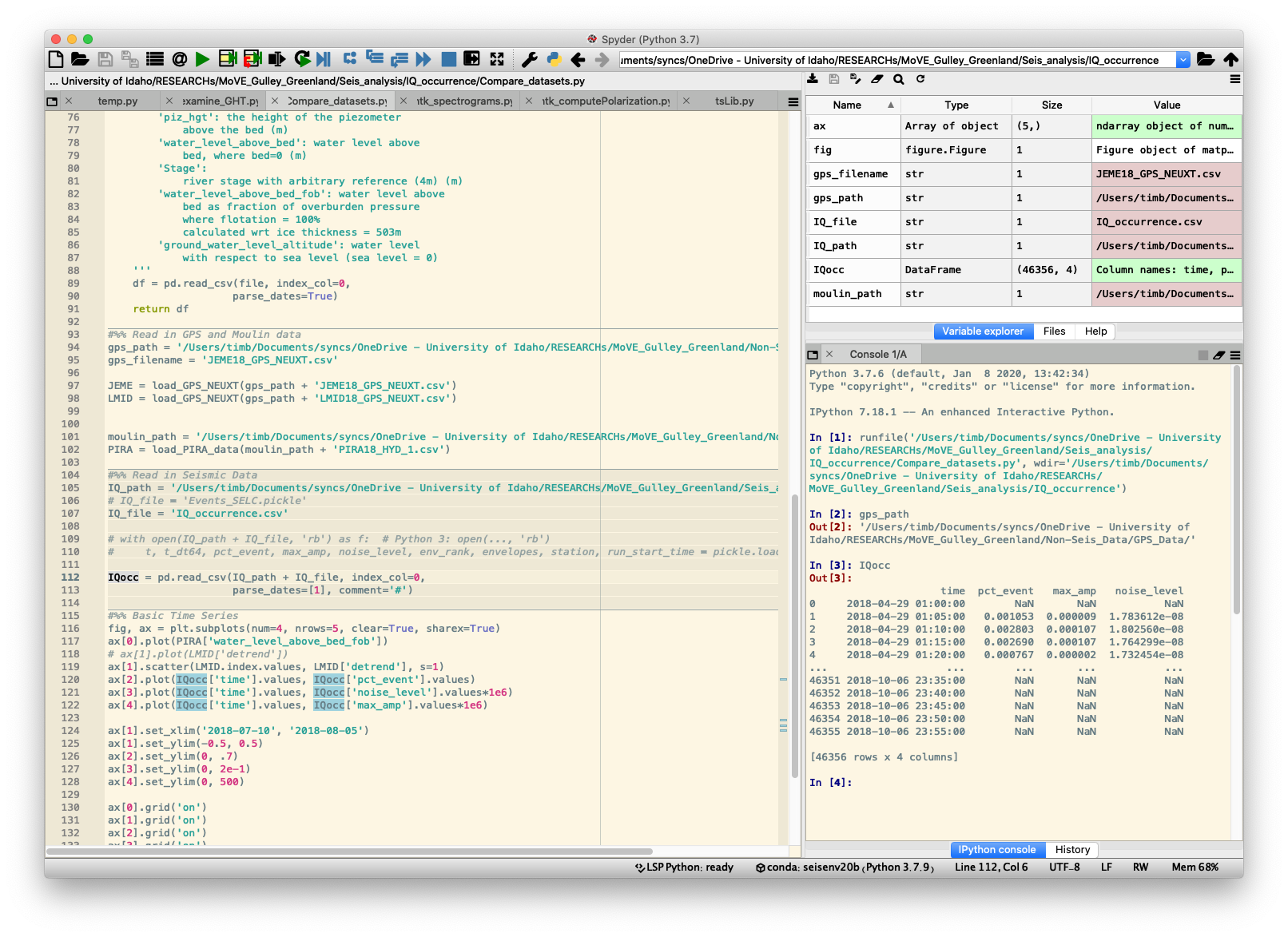

| VS Code | Jupyter Lab | Spyder |

|---|---|---|

| Visual Studio Code is a free software editor from Microsoft. It can be used to work on a series of different languages, inlcuding python. | Jupyter Lab runs in a web browser window and you can open it by typing jupyter lab at the terminal or Anaconda Prompt. |

You can open this program by typing spyder at the terminal or Anaconda Prompt window. |

| VS Code supports both Jupyter notebooks (.ipynb) and python scripts (.py). Another advantage is that it can be used to ssh into our server, kenicott, to work directly on scripts there. Tim recommends using VS Code. | Jupyter Lab is potentially more useful for data exploration, and it allows text and figures to be stored right with the code. | Spyder is generally a little better for writing complete, autonomous scripts, and writing out more complicated computing workflows. Spyder is very similar to the Matlab user interface. |

| Options for both step by step data exploration and more traditional script writing. | Rapidly growing development environment, on the ascendency for data scientists, with expanding popularity and functionality. | A traditional IDE, used by developers to write functions and scripts. |

| Writes, reads, and executes .py and .ipynb files, as well as non-python formats. | Used to write .ipynb files, although these files can be exported as py files. | Used to write .py files, although py files can work with Jupyter. |

| Versatile, however it does take some time to setup a working environment that suits each user. Things such as debugging require plugins, which need to be installed. | Produces python code with more formatting, bells and whistles, and self-contained because of embedded figures and text. | Produces python code that is potentially more portable, and easier to share with python beginners. |

|

|

|

Keeping a script running overnight (or other long periods)

You might try and take advantage of kennicott’s resources to run a script overnight, or for some other long period of time, then have the script write the output to some other kind of file, like text, csv, pickle, or npz. If logged into kennicott via ssh, sometimes, if the connection isn’t “active” in some way, you’ll get booted off of kennicott. And when you get booted off kennicott, the process (aka, application or script) that you’re running and controlling via the ssh connection will be “killed” when you apparently “hang up” the connection on kennicott. In that case, any work stored in memory is lost.

To get around the problem of hanging up on a process, 1) run the script directly from the terminal (rather than spyder), and 2) use the nohup bash command within the linux terminal window, to keep the script running in case of hangup, and 3) keep a close eye on the processes that you’re running. One example of this is a script Tim wrote to process seismic data. See the github README.md file for more information on this application, and the referenced python scripts for examples of how this works.

1) To execute a script from the terminal, rather than within spyder, you’ll have to let the computer know how to read the script by adding specific header information to the top of the script you want to run, such as

#!/opt/anaconda/envs/seisenv/bin python

# -*- coding: utf-8 -*-

In the above, case, the first line, known as a “shebang” indicates what specific conda environment the script should be run from.

2) An example of using nohup is

nohup python -u ./my_py_script.py > File_to_collect_output.log &

which will allow the my_py_script.py to run on kennicott and prevent the server from killing the process if the server becomes disconnected. The -u flag forces the text output of the python script to stdout (i.e., ‘standard out’, which is typically to a computer monitor), so that it can then be redirected (with >) to a plain text log file (File_to_collect_output.log – the log extension is arbitrary, and could be anything). Ending the line with an & moves the running command (aka, ‘a process’) into the background, so that the terminal window can still be controlled.

3) When you begin a command in the background, with &, at the terminal just below your command, you’ll see a 4 to 6 digit number. This number is the PID number, and is the number that the computer uses to track and identify the process you just launched. If you’re moving commands/processes into the background, you should be aware that some of these processes can become forgotten, zombie processes, that tie up computer resources and never actually quit or finishe running. If you start using nohup, it’s your responsibility to keep an eye on the system resources you’re using. You can view all the processes you’re responsible for by typing at the linux terminal: ps -x. This will show a list of processes attributed to you, their PID numbers, and the commands that generated these processes. To terminate a process and free up computer resources, use the command kill. For example, to end processes 2502 and 2507, type kill 2502 2507. top is another useful command that dynamically updates to show you all the processes that are running, who owns them, and what computer resources they’re consuming. Another way to use ps is ps -eo pid,etime,comm, that puts some focus on the elapsed time of each process.

Geographic information systems (GIS)

QGIS is a great tool for working with spatial data, like rasters and shapefiles. It’s free and has a lot of capability to view, manipulate, and explore spatial data. It is also the foundation for the packages QGreenland and Quantarctica. You can work with QGIS through two approaches:

- Download and install QGIS on your laptop or lab desktop, as appopriate, or,

- Run QGIS on our server, kennicott. Kennicott currently has version 3.20.3 - Odense - installed. To run it, you will need to:

- Log into the server. We recommend using vnc/remote desktop.

- SSH with the -Y flag after you are logged into the server:

ssh -Y USERNAME@kennicott.ibest.uidaho.edu. This step seems redundant, but is necessary. - Open QGIS with Singularity:

singularity run --bind /data:/data /data/singularity/qgis.focal.sif; - step 4 - Profit.

For QGIS on kennicott, some plugins are already installed (e.g. Profile Tool, QProf, QuickMapServices, QChainage). Unfortunately, Licensed plugins such as LAStools will not work, and Wine is not installed on the server.

Note that, while the software runs smoothly when SSHing from the university network, it will be a bit laggy when accessing remotely via a VPN.

The University of Idaho also has licenses to ESRI products, such as ArcGIS Pro, that are available.